#Network Bandwidth Management


10 gig switch vs 2.5 gig vs 1 gig: Which do you need?


There are many choices when it comes to networking in your home lab or in the enterprise. The choices range from 1 Gig switches to multi-gigabit switches that offer speeds of 2.5 Gig and even 10 Gig. These switches are the backbone of any network, whether small businesses, large enterprises, or home labs, driving connections and managing data transmission. Selecting the right switch is not just…


#Bandwidth Management#Ethernet Switch#Gigabit Switch#Multi-Gigabit Switches#network performance#Network Scalability#Network Switches#Power Over Ethernet#Smart Managed Switches#Unmanaged Switches


What Should You Know About Edge Computing?

As technology continues to evolve, so do the ways in which data is processed, stored, and managed. One of the most transformative innovations in this space is edge computing. But what should you know about edge computing? This technology shifts data processing closer to the source, reducing latency and improving efficiency, particularly in environments where immediate action or analysis is…

#5G#AI#AI edge#AI integration#automation#autonomous vehicles#bandwidth#cloud#cloud infrastructure#cloud security#computing#computing power#data generation#data management#data processing#data storage#data transfer#decentralization#digital services#digital transformation#distributed computing#edge analytics#edge computing#edge devices#edge inferencing#edge networks#edge servers#enterprise data#Healthcare#hybrid cloud


What is a Transceiver in a Data Center? | Fibrecross

A transceiver in a data center is a device that combines the functions of transmitting and receiving data signals, playing a critical role in the networking infrastructure. Data centers are facilities that house servers, storage systems, and networking equipment to manage and process large amounts of data. To enable communication between these devices and with external networks, transceivers are used in networking equipment such as switches, routers, and servers.

Function and Purpose

Transceivers serve as the interface between networking devices and the physical medium over which data is transmitted, such as fiber optic cables or copper cables. They convert electrical signals from the equipment into optical signals for fiber optic transmission, or they adapt signals for copper-based connections, depending on the type of transceiver and network requirements.

Types of Transceivers

In data centers, transceivers come in various forms, including:

SFP (Small Form-factor Pluggable): Commonly used for 1G Ethernet connections, with the SFP+ variant handling 10G.

QSFP (Quad Small Form-factor Pluggable): Supports higher speeds like 40G or 100G, ideal for modern data centers with high bandwidth demands.

CFP (C Form-factor Pluggable): Used for very high-speed applications, such as 100G and beyond.

These pluggable modules allow flexibility, as they can be swapped or upgraded to support different speeds, protocols (e.g., Ethernet, Fibre Channel), or media types without replacing the entire networking device.
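As a rough illustration of how the form factors above map to link speeds, here is a minimal Python sketch. The table only restates the speeds named in the list, and the names `FORM_FACTOR_SPEEDS_GBPS` and `form_factors_for` are made up for this example; real product lines include more variants than shown.

```python
# Speeds in Gbit/s, restating the list above (SFP family 1G/10G,
# QSFP 40G/100G, CFP 100G). Simplified for illustration only.
FORM_FACTOR_SPEEDS_GBPS = {
    "SFP": (1, 10),
    "QSFP": (40, 100),
    "CFP": (100,),
}


def form_factors_for(speed_gbps: int) -> list[str]:
    """Return the form-factor families listed above for a given link speed."""
    return [
        name
        for name, speeds in FORM_FACTOR_SPEEDS_GBPS.items()
        if speed_gbps in speeds
    ]


print(form_factors_for(100))
```

A speed that is not in the table simply returns an empty list, which is a reminder that the table is a simplification rather than a full compatibility matrix.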

Importance in Data Centers

Transceivers are essential for establishing physical layer connectivity—the foundation of data communication in a data center. They ensure reliable, high-speed data transfer between servers, storage systems, and external networks, which is vital for applications like cloud computing, web hosting, and data processing. In modern data centers, where scalability and performance are key, transceivers are designed to meet stringent requirements for speed, reliability, and energy efficiency.

Conclusion

In summary, a transceiver in a data center is a device that transmits and receives data signals in networking equipment, enabling communication over various network connections like fiber optics or copper cables. It is a fundamental component that supports the data center’s ability to process and share information efficiently.



Multi-Factor, Layered, Cryptographic System

A few big flaws with crypto are: how large and unwieldy the ledger can get, the centralization of decentralized systems, congestion at the base cryptographic layer, potential loss of a wallet, automation errors, and a complete lack of oversight.

Cryptographic Systems are designed to be decentralized, trustless, transactional, and secure.

The problem with this is that as the Crypto environment grew, bypassing some of those features became a requirement.

Decentralization gave way to crypto stores and Wallet Vaults, as well as the potential for complete loss of value (despite it being an online thing), and introducing waste to the ledger through these lost "Resources".

Trustless gave way to legal restrictions and disputes, and the formerly decentralized environment was tethered to the world economy.

Transactional gave way to inflation when it became a valued asset that was used for more than secure transactions.

And... Blockchain technology has proven to be very insecure and exploitable, with several Bitcoin "Branches" being made after large thefts or errors had occurred.

The Automation and Scripting layer of crypto also has potential for "Unchecked Run times" and wasted Network Cycles and Waste of Electric Power.

Needless to say; Crypto no longer serves its original purpose.

Despite all this; Crypto still has great potential. And our Future Internet designs should include protocols specifically to support it.

Separately from other internet traffic, but still, alongside it.

So what can we do with the tech to make it more usable..?

Well, first things first: Crypto wastes a large amount of Power and Internet Bandwidth, and that needs to be addressed. And I would *suggest* a temporary ban of "Unaccountable Automated Wide-Area Systems".

Automated Wide-Area Systems can be installed on multiple computers over the internet, and because they are "trustless" are often overlooked when they waste resources.

Because of the need for Accountability of these systems on our networks; there are still opportunities for the companies which manage them. However, I disagree with the current idea of oligarchic accountability termed "Proof of Stake". (It will be clear what my suggestion for this will be by the end of the article.)

Because there's a need for these systems to be transactional; we should be wary of how "Automated Transactions" are designed. As large amounts of assets can accidentally trade hands over the span of nanoseconds.

And we still want them to be Decentralized and Secure.

The Ethereum model creates extra cryptographic layers to cover some of the weaknesses in Bitcoin, and this actually allows for an illuminated solution to our "Dark Pools" in the finance sector.

And the need to reduce waste on our Electric and Network Infrastructure (especially in the case of climate change) necessitates that every digital asset on such a system is accounted for.

It also means we need a method of "Historical Ledger" disposal; so that we both have long-term records AND reduce the resources required to run a cryptographic system.

Which means we'll need "Data Banks" and "Wallet Custodians". So that nothing is ever lost; while retaining the anonymity the internet provides.

Legal Restrictions that protect the privacy of Wallet Owners are very important here. We can also *itemize* large wallets, to provide further security and privacy.

These systems can *also* be used to track firearm purchases anonymously. Which will give our ATF the tools it needs to track sales, as well as provide the privacy and security that are constantly lobbied for by Firearm owners.

I think that covers the *generalized* and *broad* requirements of wide-scale implementation. I figure that Crypto-Enthusiasts may have some input on the matter.


Top Features to Look for in Reliable Web Hosting Services in Thane, Mumbai

Choosing the right web hosting service is a critical decision for businesses aiming to establish a strong online presence. In Thane and Mumbai, where businesses are rapidly embracing digital transformation, finding reliable web hosting services can make all the difference in ensuring your website operates smoothly, securely, and efficiently. This article highlights the essential features to consider when opting for reliable web hosting services in Thane, Mumbai.

1. High Uptime Guarantee

A reliable web hosting provider should offer an uptime guarantee of at least 99.9%. High uptime keeps your website accessible to users almost all the time, limiting disruptions that could lead to lost traffic and revenue.
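To put an uptime percentage in perspective, it helps to convert it into the downtime it still allows. The short Python sketch below does that arithmetic, assuming a 365-day year; the function name `allowed_downtime_hours` is only illustrative, and actual guarantees depend on how each provider defines and measures uptime.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def allowed_downtime_hours(uptime_percent: float,
                           period_hours: float = HOURS_PER_YEAR) -> float:
    """Hours of downtime that still satisfy the given uptime percentage."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    return period_hours * (1 - uptime_percent / 100)


for pct in (99.0, 99.9, 99.99):
    hours = allowed_downtime_hours(pct)
    print(f"{pct}% uptime allows about {hours:.2f} hours of downtime per year")
```

Under these assumptions, a 99.9% guarantee still permits roughly 8.76 hours of downtime over a year, which is worth keeping in mind when comparing plans.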

2. Scalability

As your business grows, your website’s hosting needs may evolve. Opt for a hosting service that offers scalability, allowing you to upgrade resources like bandwidth, storage, and processing power without interruptions. This flexibility ensures that your website can handle increased traffic seamlessly.

3. Robust Security Features

With the rise in cyber threats, security should be a top priority when selecting a hosting provider. Look for features like SSL certificates, firewalls, regular backups, and malware protection to safeguard your website and data. Reliable providers also offer continuous monitoring to identify and address potential vulnerabilities proactively.

4. Lightning-Fast Loading Speeds

Website speed significantly impacts user experience and search engine rankings. Reliable web hosting services provide optimized infrastructure, including SSD storage and Content Delivery Networks (CDNs), to ensure your website loads quickly, even during peak traffic.

5. User-Friendly Control Panel

A user-friendly control panel, such as cPanel or Plesk, simplifies website management. It enables you to perform tasks like domain management, email setup, and file uploads with ease, even if you’re not tech-savvy.

6. 24/7 Customer Support

Responsive and dedicated customer support is a hallmark of reliable hosting services. Ensure your provider offers round-the-clock assistance through multiple channels, such as live chat, email, or phone, to address technical issues promptly.

7. Data Backup and Recovery

Frequent data backups and reliable recovery options are essential to protect your website from unexpected issues like server crashes or accidental data loss. A good hosting provider ensures your data is backed up regularly and can be restored quickly when needed.

8. Multiple Hosting Options

Different businesses have unique hosting needs. Whether you require shared hosting, VPS, dedicated servers, or cloud hosting, choose a provider that offers a range of options to suit your specific requirements.

9. Transparent Pricing

Reliable web hosting services should have clear and transparent pricing without hidden fees. Evaluate the value provided in relation to the cost, ensuring you get the features and support you need within your budget.

Why Reliable Web Hosting Matters

Opting for reliable web hosting services in Thane, Mumbai ensures your website performs optimally, delivers a seamless user experience, and supports your business’s growth. From ensuring security to providing scalability, the right hosting service acts as a strong foundation for your online presence.

Partner with Appdid Infotech

At Appdid Infotech, we specialize in delivering reliable web hosting services tailored to meet your business needs. With advanced technology, robust security, and unmatched customer support, we ensure your website stays online, secure, and fast. Contact us today to explore hosting solutions designed to propel your business forward.


San Antonio Website Hosting: Finding the Right Solution for Your Business

In today’s digital landscape, having a reliable web hosting provider is as crucial as a compelling website design. Whether you’re launching a personal blog, running a small business, or managing a large-scale enterprise in San Antonio, the choice of a hosting provider can make or break your online presence. For businesses in San Antonio, website hosting tailored to the needs of the local market can offer unique advantages. Here’s a comprehensive guide to understanding and selecting the best website hosting solutions in San Antonio.

What Is Website Hosting and Why Does It Matter?

Website hosting is the service that allows your website to be accessible on the internet. It involves storing your website’s files, databases, and other essential resources on a server that delivers them to users when they type your domain name into their browser.

Key factors such as uptime, speed, and security depend heavily on the hosting provider. Without reliable hosting, even the most well-designed website can fail to perform, leading to lost traffic, reduced credibility, and lower search engine rankings.

San Antonio’s Unique Needs for Website Hosting

San Antonio is a growing hub for businesses, startups, and entrepreneurs. The city’s vibrant economy and diverse industries demand hosting solutions that cater to various needs:

Local SEO Benefits: Hosting your website on servers based in or near San Antonio can improve website load times for local users, which may in turn help your local search rankings.

Customer Support: Local hosting providers often offer faster and more personalized support, making it easier to resolve technical issues quickly.

Community-Centric Services: San Antonio businesses often benefit from hosting providers that understand the local market and tailor their offerings to the unique challenges faced by businesses in the area.

Types of Website Hosting Available in San Antonio

Shared Hosting

For small businesses or personal websites with limited traffic, shared hosting is a cost-effective option. Multiple websites share the same server resources, making it affordable but potentially slower during high-traffic periods.

VPS Hosting

Virtual Private Server (VPS) hosting offers a middle ground between shared and dedicated hosting. Your website gets its own partition on a shared server, providing better performance and more customization options.

Dedicated Hosting

This option gives you an entire server dedicated to your website. It’s ideal for high-traffic sites or those needing advanced security and performance features.

Cloud Hosting

Cloud hosting uses a network of servers to ensure high availability and scalability. It’s a flexible option for businesses expecting fluctuating traffic.

Managed Hosting

For those without technical expertise, managed hosting takes care of server management, updates, and backups, allowing you to focus on your business instead of technical maintenance.

Factors to Consider When Choosing Website Hosting in San Antonio

Performance and Uptime

Ensure your hosting provider offers at least 99.9% uptime to keep your website accessible around the clock. Fast loading times are critical for user experience and SEO rankings.

Security Features

Look for hosting providers that offer robust security measures, including SSL certificates, firewalls, DDoS protection, and regular backups.

Scalability

Your hosting solution should be able to grow with your business. Choose a provider that offers flexible plans to accommodate increased traffic and resources as needed.

Customer Support

24/7 customer support with knowledgeable staff is invaluable for resolving technical issues promptly. Many San Antonio-based hosting companies offer localized support to cater to their clients better.

Cost and Value

While affordability is important, don’t compromise on essential features. Compare the cost with the value offered, including storage, bandwidth, and additional tools like website builders or marketing integrations.

How Local Hosting Supports San Antonio Businesses

Local hosting providers understand the pulse of the San Antonio market. They can offer tailored solutions for restaurants, retail stores, service providers, and tech startups. By prioritizing local needs, such providers enable businesses to thrive in a competitive digital landscape.

Tips for Maintaining Your Hosted Website

Regular Backups: Protect your data by ensuring automatic and manual backups are part of your hosting plan.

Monitor Performance: Use tools to analyze website speed and resolve bottlenecks.

Stay Updated: Keep your website software, plugins, and security features up to date to prevent vulnerabilities.

Conclusion

Choosing the right website hosting provider in San Antonio is a critical step toward building a successful online presence. From understanding the local market to evaluating hosting types and features, there are numerous factors to consider. By selecting a reliable provider and maintaining your hosted website effectively, you can ensure your business stands out in San Antonio’s competitive digital landscape.

Whether you’re launching a new venture or upgrading your current hosting solution, San Antonio offers a wealth of hosting options to meet your unique needs. Make the smart choice today to power your online success!


Amazon DCV 2024.0 Supports Ubuntu 24.04 LTS With Security

NICE DCV is a different entity now. Along with improvements and bug fixes, NICE DCV is now known as Amazon DCV with the 2024.0 release.

The DCV protocol that powers Amazon Web Services (AWS) managed services like Amazon AppStream 2.0 and Amazon WorkSpaces is now regularly referred to by its new moniker.

What’s new with version 2024.0?

A number of improvements and updates are included in Amazon DCV 2024.0 for better usability, security, and performance. The 2024.0 release now supports the most recent Ubuntu 24.04 LTS, which brings extended long-term support to ease system maintenance along with the most recent security patches. Wayland support is incorporated into the DCV client on Ubuntu 24.04, which improves application isolation and graphical rendering efficiency. Furthermore, DCV 2024.0 now enables the QUIC UDP protocol by default, providing clients with optimal streaming performance. Additionally, the update adds the option to blank the Linux host screen when a remote user connects, blocking local access to and interaction with the remote session.

What is Amazon DCV?

With Amazon DCV, a high-performance remote display protocol, customers can securely deliver remote desktops and application streaming from any cloud or data center to any device, over a variety of network conditions. Together with Amazon EC2, Amazon DCV lets customers run graphics-intensive programs remotely on EC2 instances and stream their user interface to simpler client machines, doing away with the need for pricey dedicated workstations. Customers use Amazon DCV for their remote visualization needs across a wide spectrum of HPC workloads. Moreover, well-known services like Amazon AppStream 2.0, AWS Nimble Studio, and AWS RoboMaker use the Amazon DCV streaming protocol.

Advantages

Elevated Efficiency

You don’t have to pick between responsiveness and visual quality when using Amazon DCV. With no loss of image accuracy, it can respond to your apps almost instantly thanks to the bandwidth-adaptive streaming protocol.

Reduced Costs

Customers may run graphics-intensive apps remotely and avoid spending a lot of money on dedicated workstations or moving big volumes of data from the cloud to client PCs thanks to a very responsive streaming experience. It also allows several sessions to share a single GPU on Linux servers, which further reduces server infrastructure expenses for clients.

Adaptable Implementations

Service providers have access to a reliable and adaptable protocol for streaming apps that supports both on-premises and cloud usage thanks to browser-based access and cross-OS interoperability.

Entire Security

To protect customer data privacy, it sends pixels rather than geometry. To further guarantee the security of client data, it uses the TLS protocol to secure end-user inputs as well as pixels.

Features

Amazon DCV supports remote environments running both Windows and Linux, and it offers native clients for Windows, Linux, and macOS as well as an HTML5 client for web browser access. Multiple displays, 4K resolution, USB devices, multi-channel audio, smart cards, stylus/touch capabilities, and file redirection are all supported by native clients.

With the help of DCV Session Manager, the lifecycle of DCV sessions can be created and managed programmatically across a fleet of servers. Developers can create personalized Amazon DCV web browser client applications with the help of the Amazon DCV web client SDK.

How to Install DCV on Amazon EC2?

Implement:

Sign up for an AWS account and activate it.

Open the AWS Management Console and log in.

Either download and install the relevant Amazon DCV server on your EC2 instance, or choose the proper Amazon DCV AMI from the Amazon Web Services Marketplace, then create an AMI using your application stack.

After confirming that traffic on port 8443 is permitted by your security group’s inbound rules, deploy EC2 instances with the Amazon DCV server installed.

Link:

On your device, download and install the relevant Amazon DCV native client.

Use the web client or native Amazon DCV client to connect to your remote computer at https://:8443.

Stream:

Use Amazon DCV to stream your graphics apps across several devices.
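If the client cannot connect, one quick thing to rule out is whether port 8443 is reachable at all. The following Python sketch is one possible way to check that from the client machine; the helper name `port_is_open` is an assumption for this example and not part of any Amazon DCV tooling. It tests TCP reachability only, so it says nothing about the UDP-based QUIC transport.

```python
import socket


def port_is_open(host: str, port: int = 8443, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and attempts a TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False
```

Calling `port_is_open("your-server-address")` with the address of your own EC2 instance returns `False` when the security group's inbound rules, a firewall, or a stopped DCV server prevent the connection.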

Use cases

Visualization of 3D Graphics

HPC workloads are becoming more complicated and consuming enormous volumes of data in a variety of industrial verticals, including Oil & Gas, Life Sciences, and Design & Engineering. The streaming protocol offered by Amazon DCV makes it unnecessary to send output files to client devices and offers a seamless, bandwidth-efficient remote streaming experience for HPC 3D graphics.

Application Access via a Browser

The Web Client for Amazon DCV is compatible with all HTML5 browsers and offers a mobile device-portable streaming experience. By removing the need to manage native clients without sacrificing streaming speed, the Web Client significantly lessens the operational pressure on IT departments. With the Amazon DCV Web Client SDK, you can create your own DCV Web Client.

Personalized Remote Apps

Custom remote applications and managed services can benefit from how easily the Amazon DCV streaming protocol can be integrated. With native clients that support up to 4 monitors at 4K resolution each, Amazon DCV uses end-to-end AES-256 encryption to safeguard both pixels and end-user inputs.

Amazon DCV Pricing

Amazon Entire Cloud:

Using Amazon DCV on AWS does not incur any additional fees. Clients only have to pay for the EC2 resources they actually use.

On-site and third-party cloud computing

Please get in touch with DCV distributors or resellers in your area for more information about licensing and pricing for Amazon DCV.

Read more on Govindhtech.com

#AmazonDCV#Ubuntu24.04LTS#Ubuntu#DCV#AmazonWebServices#AmazonAppStream#EC2instances#AmazonEC2#News#TechNews#TechnologyNews#Technologytrends#technology#govindhtech


Here’s the Vahn breakdown!

Circumstance, Trauma and healing.

Vahn’s entire life is a series of things going terribly wrong and then continuing to live whether they like it or not.

What exactly Vahn was like right before the Terrible, Horrible, No Good Day is a big blank space, so they can only really be analyzed from the time they met Trigger and onwards (with the exception of a handful of early childhood flashbacks).

For all the messiness of the two hunters’ relationship, I do think that on the whole Trigger was probably the best person to have found Vahn. He was never the best at helping Vahn emotionally, but Trigger’s decision making inadvertently kept Vahn safe from Interstellar for Five Years.

At face value, Andrew seems like he would have been the best person to have picked up Vahn. He’s already familiar with Vahn and the rest of the Avalons would have been an incredible support network for the freshly traumatized teen. However, that’s not taking into account Vahn’s new powers coupled with a dangerously unstable mental state.

We saw in a flashback the first time Vahn’s arms extended: they were having some kind of emotional breakdown and attacking Trigger. If Vahn did that to a member of the Avalon family, it could have gone much worse than a minor concussion for just Vahn. Andrew is freshly traumatized himself at this point, which adds another volatile element to the mix. Even if everything worked out about as well as it did with Trigger, the greatest difference is that Andrew would have almost certainly brought up Vahn’s tattoos and powers to outside parties. Well-intentioned, no doubt, but with what we know of Interstellar and especially Dr. Yaromir, any whiff of Vahn’s powers would have had Fiachra and her people kidnapping Vahn within the week.

Hell, within 24 hours of Trigger semi-abandoning them, Vahn’s tattoos were photographed at a random bar and sent to Yaromir, five YEARS after everything went down. And the more I think about it, the more I’m amazed Trigger managed to not only hide them for so long, but keep Vahn in relatively good health too. Trigger happened to have just the right collection of contacts and paranoia to get Vahn medicine and keep them wrapped up and off the wrong radars long enough to survive to this point.

Which is all the more important considering Vahn’s amnesia. Vahn regularly gets mistaken for a kid and does exhibit many childish qualities, but when you take into account they’re working with all of five years’ worth of memories? Yeah, makes sense why they’re skewing a bit lower. Vahn doesn’t have access to their formative years so it’s a bit like trying to understand a book that’s had the first dozen chapters ripped out.

Blocking out painful memories seems to be Vahn’s way of dealing with trauma as well. Selective memory is a legitimate way people can deal with extremely traumatic experiences.

It happens at least twice, the first being when Vahn first got their tattoos and the world almost ended, and then again after being halfway murdered and assaulted by Aiden. At least the second time Vahn only lost a week. But while losing memories helped protect Vahn’s mind the first time, it left them in an extremely vulnerable state where they needed to relearn how to be a person.

On the whole, Trigger was decent about avoiding Vahn’s triggers (pun intended). He didn’t push about their past unnecessarily and respected Vahn’s wishes to stay away from hospitals. Trigger’s overprotective treatment certainly stifled Vahn in the long run, but in the early days, I don’t think Vahn had the emotional bandwidth to take care of themselves.

(What makes me curious as well is why Trigger let Vahn stay for so long. Sure it’s the right thing to do, but Trigger could have just dropped them off at the nearest crisis center with some spare clothes and flown away still having done right by, what was essentially, a stranger.)

By the time of the comic, Vahn’s dependence on Trigger has curdled into over-dependence. Up until Raw Schism, Vahn always defers to Trigger’s direction, even when they disagree with him. Two scenes in particular really cement this: when Trigger locks Vahn in the bathroom during Rotten Hand Brothers, and when Trigger and Vahn shoplifted. Both times Vahn is verbally disagreeing with Trigger but still physically follows his directions. Whereas by Raw Schism, Vahn has learned first and second hand that Trigger Can Be Wrong. Which is HUGE for Vahn, since that now forces them into the uncomfortable position of having to make decisions for themselves.

One of the reasons I really love Poco A Poco is that it’s basically Vahn speed running what every college freshman goes through their first time moving out from home. Gotta do all your own cooking, cleaning, maintenance etc. Most importantly, Vahn had Erich there to teach them that independence is not isolation.

Vahn and Trigger’s relationship has been unhealthily codependent for a long time, and what was good for them at the start isn’t what either of them need right now. You can’t leave a cast on forever.

When I think about the worst case scenario between Vahn’s memory loss and the target of their powers, there could have been a very unpleasant timeline where Vahn was kidnapped by Interstellar much earlier on. What kind of person would that have created? If the main influences on Vahn had been Fiachra and Yaromir instead of Trigger?

Time heals all wounds, but with the wrong kind of treatment, not all wounds heal back Right.

The roads Vahn has had to face have never been easy, that's for sure, oh boy;;; All paths seem to lead to some level of pain and there's some less so than others (and we'd say you've made some excellent deductions hoho) but you're absolutely right that Vahn's journey has always been about deciding whether or not it's worth bearing to see another day.

Thank you for another juicy analysis, we loved reading this so much!!! We're floored and absolutely humbled!! 🙏✨


Gundam: Halo In The Stars

"It’s been 19,200 years since humanity left the cradle of Sol, riding the cosmic filaments of Stringspace, and since then, it has expanded across the breadth of the Orion, Sagittarius, and Perseus Arms of the Milky Way Galaxy, yet it is still a mere island in a titanic ocean, one that itself still has unexplored jungles.

Trillions have diversified into interstellar empires, interplanetary and planetary polities, corporate colonies, and a variety of independent populations, all overseen by the Solar Lancers, a neutral paramilitary/government hybrid organization created in the aftermath of the Calamity Events, one of three different dark ages that set back human exploration and progress in the past sixteen millennia.

Holding the tens of thousands of colonies together are the mapped-out Stringspace Faster-Than-Light Transit Corridors, through which thousands of human ships flow every day, and the Eidonet, a dataspace system built upon a network of high-bandwidth superluminal data transfer/unlink arrays that allow real-time communication and trade across the entire expanse of human colonized space, backed up by secondary luminal networks.

Yet order is not truly established, with wars, corpo corruption, unrest, inequality, space piracy, and tyrannic militarism popping up everywhere from the Frontier Periphery to the Core Sphere, and the Solar Lancers, as much as it strives to help humanity, is nowhere near perfect and itself has played a hand in many of these issues.

Uncanny cosmic horrors of human and unknown origin lurk in all this chaos, with humanity delving deeper and deeper into the mystifying yet also horrifying depths of the reality-contradicting, or outright reality-breaking, potential of ever-advancing Thaulogical technology.

Amongst the countless battlefields and conflicts popping up, mercenaries from any manner of background thrive, especially the pilots of the towering Mobile Suit mechs, commonly referred to as Apollyons, and their Handlers. Particularly freedom-seeking individuals are overseen and managed by the independent private military contractor support organization Qixx, where freedom to do any contract or job is allowed, no matter who the contractor is or how horrific the mission is, leading to many Qixx Apollyons making a name for themselves as either great heroes who toppled corrupt regimes, exposed the horrific crimes of corporations, and ended bloody wars, or villainous monsters who destroyed colony habitats holding millions, sabotaged agriculture and industrial centers in ways that led to entire interplanetary states starving, and perpetrated endless cycles of destruction across conflict zones to line their own pockets.

Transhumanism is rampant across every part of the Human Sphere, as the need for either adaptation to the harsh environment of space and exoplanets or more ways to express one’s true self grows, though this philosophy and way of life is exploited by corrupted actors for their own self-serving needs as well.

At the core of the Human Sphere the star system of Sol remains, yet it is no bustling capital of a grand interstellar polity (at least only after two human dark ages), but merely the largest and most well-developed industrial center in human hands, where many of its people live and die without ever stepping outside the system, their homes and lives exploited and used as a resource to fuel the opulent Core Sphere and the ever-expanding Outer Periphery. Their government, the Sol Administration Cadre, is merely a puppet state with no real power. Because of this, factions of revolutionists, terrorists, freedom fighters, and more who want some form of Sol Independence have formed over the millennia, uniting into the Solian Unchained, but it is only recently that any of them have begun making moves, ones that may have effects beyond breaking the cycle of exploitation plaguing the birthplace of humankind.

But in the middle of all this, centuries of secret planning and cascading buildup that will change the course of humanity forever break through the surface."

This is my little Gundam story. I've recently gotten into the mecha genre, mainly through Gundam, Lancer RPG and Armored Core, and I've found myself wanting to create an original mecha story, which has now evolved into a Gundam story, though not based in any of the canon timelines.

This is the Halo Dominus Timeline.

It has elements inspired by stuff like Lancer, Armored Core, Gundam canon as well as original sci-fi stuff. I don't think I will ever be able to write any sort of comprehensible story in the setting, but I just wanna share it here.

In the setting, the Human Sphere's political and social landscape is overseen by the centralized neutral entity, the Solar Lancers, regulating FTL travel and technology in order to prevent another Calamity Event, as well as curbing piracy, corruption, and human rights violations.

In theory.

In reality, the Solar Lancers are heavily decentralized and de-unified, split into many factions with differing views. Many commit or support said piracy, corruption and violations. Many exploit the organization's unbalanced control over FTL drive and technological production and distribution to manipulate underdeveloped polities into agreements that only favor them. Many violate the sovereignty of independent worlds. And many do strive to accomplish the original goals of the organization, yet even these groups differ in how to morally and ethically go about doing it.

Gundams in the setting are like HORUS mechs, weird eldritch Mobile Suits built out of technological miracles, strange mechanical components that shouldn't be able to work but do, and Thaulogical Cores inhabited by sapient AIs, or, as they are referred to in the setting, Ontological Data Entities. Gundam pilots become so through being chosen by these machines, forming a physical, parasitic, symbiote-like bond that allows the pilots to 'understand' these ODEs while also ensuring that no other pilot can possibly fly the machines.

They appear out of nowhere, and none have found the source of the machines, only finding exabit data packets filled with nonsense, and manufacturing facilities that always seemed to have recently printed something.

Technology in the setting is based around gravity manipulation, AIs/ODEs, exotic metamaterials, and Anat Manipulation. The latter makes use of Anat exotic matter/particles, a particularly important handwavium material in the setting that is used as a hyper-powerful and efficient power source, a data conductor and the main fuel source of FTL drives.

More than that, it is also the main component of the practically magical Thaulogical technology, as things tend to get weird when high density Anat is manipulated in a certain way. Coral Release levels of weird.

Transhumanism is common in the setting, and plays a big part in some of the characters as well as the themes. One particular theme is 'physical flesh, no matter how changed, does not define what is human, or more accurately, a person'.

The main story within the setting as well as the characters are still really abstract, but it primarily involves Solar Lancer 344th Liberation Echelon and its employed private military contractor group Orphios getting caught in the middle of a Solar Lancer Civil War and a shadow conflict between the remnants of incomprehensible entities pulling the strings that threatens to unravel the Human Sphere.

The main character is Sierra Kimura (She/Her), Orphios pilot of the Gundam Astraeus. (Sol-Dragon, Silver-Eyes Burning Bright, Winter Scales Coiled Around The Stars)

#mecha#gundam#lancer rpg#scifi#worldbuilding#Ireallylikemecha#sierraisalsotrans#technology#babblingaboutmyabstractscifistories

Text

Where Can You Find the Cheapest Dedicated Server Deals in Los Angeles?

Table of Contents:

Introduction

Importance of Reliable and Affordable Dedicated Servers

Why Los Angeles is a Prime Location for Hosting Solutions

Key Stats and Facts About Dedicated Servers in Los Angeles

Growing Popularity

Low Latency Connections

Energy Efficiency

Cost-Effective Options

Security and Compliance

What is a Dedicated Server, and Why Do You Need One?

Definition of a Dedicated Server

Benefits of Dedicated Servers:

Performance

Customization

Security

Scalability

How to Find the Cheapest Dedicated Server Deals in Los Angeles

Step 1: Assess Your Needs

Step 2: Compare Hosting Providers

Step 3: Look for Value-Added Features

Why Atalnetworks is the Best Choice for Dedicated Servers in Los Angeles

Key Features of Atalnetworks’ Dedicated Servers:

Cost-Effective Plans

Reliable Performance

Scalability

24/7 Support

Cutting-Edge Hardware

DDoS-Protected Infrastructure

Dedicated Server Pricing Plans at Atalnetworks

ATL10 Plan

ATL100TB Plan

ATL1GUNMETERD Plan

High-End Plans

How Atalnetworks Supports LA Businesses with Cutting-Edge Solutions

Expert Support for Growing Businesses

Optimized for Local Markets

Comprehensive Hosting Services

Enhancing Your Hosting Experience with Atalnetworks

Premium Features and Reliable Performance

Customizable Hosting Plans for Businesses of All Sizes

Thriving in a Competitive Digital Landscape

Call to Action

Start Your Hosting Journey Today

Contact Atalnetworks for a Free Consultation

Read More

Are Dedicated Servers Shaping Web Hosting's Future in Singapore?

Why Tech Leaders Choose Dedicated Server Ireland: A Complete Analysis

When managing a growing business or launching a new tech startup, finding a reliable and affordable dedicated server solution in Los Angeles can be a game-changer. With the increasing demand for secure, fast, and robust hosting solutions, many companies are searching for the best dedicated server deals without breaking their budget.

Key Stats and Facts About Dedicated Servers in Los Angeles

Growing Popularity: Los Angeles is a major hub for tech innovation and media, making it one of the top cities for data center infrastructure and dedicated server hosting in the United States. Reports indicate that the data center market in Los Angeles is projected to grow at over 12% annually through 2025.

Low Latency Connections: For businesses targeting West Coast, Asia-Pacific, and even global customers, Los Angeles-based servers offer low latency thanks to the city's strategic connectivity to undersea cable routes and major internet exchanges. This means faster loading times and smoother user experiences.

Energy Efficiency: Many Los Angeles-based data centers lead the way in green energy use, with some facilities powered by up to 70% renewable energy sources, helping businesses reduce their carbon footprint.

Cost-Effective Options: Dedicated servers in Los Angeles can start as low as $50 per month for basic configurations, with high-performance enterprise solutions scaling upwards depending on bandwidth, storage, and customization requirements.

Security and Compliance: Data centers in Los Angeles often adhere to strict compliance standards such as ISO 27001, SOC 2, and HIPAA, ensuring robust security and reliability for businesses handling sensitive data.

These stats highlight why Los Angeles is a prime choice for dedicated server hosting, balancing performance, scalability, and value for businesses of all sizes.

If you’ve been asking yourself where you can find the cheapest dedicated server in Los Angeles, you’re in the right place. This guide will help you explore your options and introduce you to Atalnetworks, a provider that consistently stands out for its cost-effective and high-performance hosting services in LA.

What is a Dedicated Server, and Why Do You Need One?

A dedicated server is a hosting solution where an entire server is allocated to a single user or business. Unlike shared hosting, where resources like CPU, bandwidth, and memory are divided among multiple users, a dedicated server ensures you have full control and access to the server’s resources.

Why Choose a Dedicated Server?

Performance: Dedicated servers deliver unmatched performance, speed, and reliability.

Customization: You can customize your operating system, control panels, and software for your specific needs.

Security: With safeguards such as DDoS protection, dedicated servers provide top-notch security.

Scalability: As your business grows, you can easily upgrade to match your increasing traffic or processing needs.

Whether you’re running high-traffic websites, hosting applications, or managing large databases, a dedicated server in Los Angeles ensures your business has the infrastructure it needs to succeed.

How to Find the Cheapest Dedicated Server Deals in LA

Finding affordable dedicated server hosting in Los Angeles doesn’t have to be overwhelming. Here are three steps to help you make the right decision:

1. Assess Your Needs

Before starting your search, outline your hosting requirements:

How much bandwidth do you need?

Do you require unmetered data for high-volume traffic?

What level of security is crucial for your business?

Are additional features like full root access or enterprise-grade data centers essential to your operations?
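To put a number on the bandwidth question in the checklist above, a sustained traffic rate can be converted into monthly data transfer. The sketch below is only a back-of-the-envelope aid: the function name, the 30-day month, and the use of decimal terabytes (10^12 bytes) are assumptions made for illustration, not figures from any provider.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def monthly_transfer_tb(sustained_mbps, days=30):
    """Convert a sustained rate in megabits per second to terabytes per month.

    Assumes decimal units: 1 Mbps = 10**6 bits/s and 1 TB = 10**12 bytes.
    """
    bytes_per_second = sustained_mbps * 10**6 / 8
    return bytes_per_second * SECONDS_PER_DAY * days / 10**12


if __name__ == "__main__":
    for mbps in (50, 100, 1000):
        tb = monthly_transfer_tb(mbps)
        print(f"{mbps} Mbps sustained is about {tb:.1f} TB per 30-day month")
```

By this estimate, a 1 Gbps port running flat out moves roughly 324 TB in 30 days, so a metered allowance of a few tens of terabytes corresponds to a fairly low average utilization of such a port.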

2. Compare Hosting Providers

When comparing hosting providers in Los Angeles, pay attention to:

Pricing plans and whether they match your budget.

The performance and reliability of their servers.

Transparency in costs—avoid hidden fees.

Features like 24/7 support, server customization, and cutting-edge hardware.

3. Look for Value-Added Features

Price isn’t the only factor to consider. Look for perks like:

Live chat support for fast problem resolution.

Advanced options like control panels and server monitoring.

Exclusive deals for new subscribers.

One provider offering exceptional value is Atalnetworks. With a variety of budget-friendly plans and reliable infrastructure, Atalnetworks is a go-to option for businesses and developers in Los Angeles.

Why Atalnetworks is the Best Choice for Dedicated Servers in Los Angeles

Atalnetworks offers high-performance dedicated servers designed for businesses of all sizes. With state-of-the-art features and competitive prices, they make high-quality hosting accessible to everyone.

Key Features of Atalnetworks’ Dedicated Servers:

Cost-Effective Plans: Starting at just $99/month, Atalnetworks provides affordable options tailored to your needs.

Reliable Performance: Their servers are housed in N+1 data centers in Los Angeles, ensuring low latency and minimal downtime.

Scalability: Flexible plans allow you to upgrade as your business grows.

24/7 Support: Experienced professionals are always available via live chat and email to help with any issue.

Cutting-Edge Hardware: From Intel Xeon processors to RAID storage, Atalnetworks uses the latest technology for optimal hosting solutions.

DDoS-Protected Infrastructure: Keep your data safe from cyber threats without compromising speed and performance.

Dedicated Server Pricing Plans at Atalnetworks:

1. ATL10

Price: $99/month

Xeon 4116/1230v5/2640v3 CPU

32 GB RAM

1 TB Disk

20 TB bandwidth

1 Gbps Port

2. ATL100TB

Price: $150/month

100 TB Bandwidth

32 GB RAM

DDoS-protected infrastructure

3. ATL1GUNMETERD

Price: $168/month

Unmetered data

32 GB RAM

Perfect for traffic-heavy applications

4. High-End Plans

For businesses needing more power, Atalnetworks offers dual CPU and massive storage options. Their ATL10G plan features 10G unmetered bandwidth and high-speed SSDs for $770/month.

Explore All Plans Here

With these flexible pricing options, you can easily buy a dedicated server in Los Angeles without worrying about cost.
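As a rough way to compare the plans listed above, the sketch below picks the lowest-priced plan whose included transfer covers a given monthly requirement. Prices and allowances are copied from the list above; treating "unmetered" as unlimited and ignoring CPU, disk, and port speed are simplifying assumptions, and the function name is made up for this example.

```python
import math

# (name, monthly price in USD, included transfer in TB), as listed above.
# "Unmetered" is modelled as unlimited, which is a simplification.
PLANS = [
    ("ATL10", 99, 20),
    ("ATL100TB", 150, 100),
    ("ATL1GUNMETERD", 168, math.inf),
    ("ATL10G", 770, math.inf),
]


def cheapest_plan(required_tb):
    """Return (name, price) of the lowest-priced plan covering required_tb."""
    candidates = [(price, name) for name, price, tb in PLANS if tb >= required_tb]
    price, name = min(candidates)
    return name, price


if __name__ == "__main__":
    for need in (10, 50, 500):
        name, price = cheapest_plan(need)
        print(f"{need} TB/month -> {name} at ${price}/month")
```

A real comparison would also weigh the hardware in each plan; this only looks at transfer and price.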

How Atalnetworks Supports LA Businesses with Cutting-Edge Solutions

Atalnetworks is not just a hosting provider; they’re a trusted partner for businesses relying on dedicated server hosting in Los Angeles. Here’s how:

Expert Support for Growing Businesses

Their 24/7 support team ensures your hosting experience is seamless, offering guidance on setup, maintenance, and upgrades whenever needed.

Optimized for Local Markets

Their Los Angeles dedicated servers are optimized for businesses serving local audiences, ensuring quick response times and reliable connectivity.

Comprehensive Hosting Services

Atalnetworks provides a full suite of hosting services, making them a one-stop-shop for all your server and cloud needs.

If you’re looking for the most reliable dedicated server hosting solutions, don’t wait: contact their sales team today to get started.

Enhancing Your Hosting Experience with Atalnetworks

By choosing Atalnetworks, you’re not just getting a cheap server—you’re gaining access to premium features, reliable performance, and a partner dedicated to your success. Whether you're a startup, small business, or an established enterprise, their customizable hosting plans provide the flexibility and security you need to grow.

With dedicated server web hosting in Los Angeles backed by cutting-edge data center technology, Atalnetworks enables your business to thrive in a competitive digital landscape.

Start your hosting journey today! Contact Atalnetworks for a free consultation and discover the ideal server setup for your needs.

Read More: 1. Are Dedicated Servers Shaping Web Hosting's Future in Singapore? 2. Why Tech Leaders Choose Dedicated Server Ireland: A Complete Analysis

Note

You mentioned implementing TCP on top of UDP, what does that sort of thing normally entail?

(for the record "implementing TCP on top of UDP" is not really what we want, what I specifically mentioned is "part of the functionalities TCP provides gets reimplemented on top of UDP")

Okay so this is probably my 5th rewrite of a potential reply to this, and this ask would otherwise sit for days if I were to write a detailed breakdown of IP, TCP and UDP, so instead I'm gonna paste a quote from the documentation of ENet, one of the "game networking libraries" I mentioned earlier:

ENet evolved specifically as a UDP networking layer for the multiplayer first person shooter Cube. Cube necessitated low latency communication with data sent out very frequently, so TCP was an unsuitable choice due to its high latency and stream orientation. UDP, however, lacks many sometimes necessary features from TCP such as reliability, sequencing, unrestricted packet sizes, and connection management. So UDP by itself was not suitable as a network protocol either. No suitable freely available networking libraries existed at the time of ENet's creation to fill this niche. UDP and TCP could have been used together in Cube to benefit somewhat from both of their features, however, the resulting combinations of protocols still leaves much to be desired. TCP lacks multiple streams of communication without resorting to opening many sockets and complicates delineation of packets due to its buffering behavior. UDP lacks sequencing, connection management, management of bandwidth resources, and imposes limitations on the size of packets. A significant investment is required to integrate these two protocols, and the end result is worse off in features and performance than the uniform protocol presented by ENet.

(there is more behind this link)
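To give a rough idea of what "reimplementing part of TCP on top of UDP" looks like, here is a small sketch that adds two of the features the ENet quote lists, sequencing and reliability, to plain datagrams. This is not ENet's wire format or API: the header layout and the `Sender`/`Receiver` classes are made up for this example, and actual sockets, retransmission timers and bandwidth management are left out.

```python
import struct

# Hypothetical header: 1-byte packet type, 4-byte sequence number (network order).
HEADER = struct.Struct("!BI")
DATA, ACK = 0, 1


def encode(kind, seq, payload=b""):
    return HEADER.pack(kind, seq) + payload


def decode(datagram):
    kind, seq = HEADER.unpack_from(datagram)
    return kind, seq, datagram[HEADER.size:]


class Sender:
    """Numbers outgoing payloads and remembers them until they are acknowledged."""

    def __init__(self):
        self.next_seq = 0
        self.unacked = {}  # seq -> datagram still waiting for an ACK

    def send(self, payload):
        datagram = encode(DATA, self.next_seq, payload)
        self.unacked[self.next_seq] = datagram
        self.next_seq += 1
        return datagram  # the caller would pass this to socket.sendto()

    def on_ack(self, datagram):
        kind, seq, _ = decode(datagram)
        if kind == ACK:
            self.unacked.pop(seq, None)

    def pending(self):
        """Datagrams to retransmit, e.g. when a timer fires."""
        return [self.unacked[seq] for seq in sorted(self.unacked)]


class Receiver:
    """Acknowledges every data packet and releases payloads in sequence order."""

    def __init__(self):
        self.expected = 0
        self.buffer = {}  # seq -> payload that arrived ahead of its turn

    def on_data(self, datagram):
        _, seq, payload = decode(datagram)
        delivered = []
        if seq >= self.expected:
            self.buffer.setdefault(seq, payload)
            while self.expected in self.buffer:
                delivered.append(self.buffer.pop(self.expected))
                self.expected += 1
        # Duplicates are acknowledged again so the sender stops resending them.
        return encode(ACK, seq), delivered
```

The sender keeps a copy of everything unacknowledged so it can resend it, and the receiver holds back packets that arrive early until the gap is filled. A real library layers timers, fragmentation, channels and throttling on top of this.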

Text

800G OSFP - Optical Transceivers - Fibrecross

800G OSFP and QSFP-DD transceiver modules are high-speed optical solutions designed to meet the growing demand for bandwidth in modern networks, particularly in AI data centers, enterprise networks, and service provider environments. These modules support data rates of 800 gigabits per second (Gbps), making them ideal for applications requiring high performance, high density, and low latency, such as cloud computing, high-performance computing (HPC), and large-scale data transmission.

Key Features

OSFP (Octal Small Form-Factor Pluggable):

Features 8 electrical lanes, each capable of 100 Gbps using PAM4 modulation, achieving a total of 800 Gbps.

Larger form factor compared to QSFP-DD, allowing better heat dissipation (up to 15W thermal capacity) and support for future scalability (e.g., 1.6T).

Commonly used in data centers and HPC due to its robust thermal design and higher power handling.

QSFP-DD (Quad Small Form-Factor Pluggable Double Density):

Also uses 8 lanes at 100 Gbps each for 800 Gbps total throughput.

Smaller and more compact than OSFP, with a thermal capacity of 7-12W, making it more energy-efficient.

Backward compatible with earlier QSFP modules (e.g., QSFP28, QSFP56), enabling seamless upgrades in existing infrastructure.
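The lane arithmetic behind both form factors can be written out as a short calculation. The sketch below multiplies lanes by per-lane rate and, as an added note, derives the nominal symbol rate from the fact that PAM4 carries 2 bits per symbol; it ignores FEC and encoding overhead, so real line rates are somewhat higher. The function names are illustrative.

```python
import math


def aggregate_gbps(lanes, gbps_per_lane):
    """Total module throughput from the number of lanes and the per-lane rate."""
    return lanes * gbps_per_lane


def nominal_symbol_rate_gbaud(gbps_per_lane, levels):
    """Symbols per second needed per lane, ignoring FEC/encoding overhead.

    A modulation with `levels` amplitude levels carries log2(levels) bits
    per symbol, so PAM4 (4 levels) carries 2 bits per symbol.
    """
    return gbps_per_lane / math.log2(levels)


if __name__ == "__main__":
    print(aggregate_gbps(8, 100), "Gbps total")
    print(nominal_symbol_rate_gbaud(100, 4), "GBd per lane (nominal)")
```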

Applications

Both form factors are tailored for:

AI Data Centers: Handle massive data flows for machine learning and AI workloads.

Enterprise Networks: Support high-speed connectivity for business-critical applications.

Service Provider Networks: Enable scalable, high-bandwidth solutions for telecom and cloud services.

Differences

Size and Thermal Management: OSFP’s larger size supports better cooling, ideal for high-power scenarios, while QSFP-DD’s compact design suits high-density deployments.

Compatibility: QSFP-DD offers backward compatibility, reducing upgrade costs, whereas OSFP often requires new hardware.

Use Cases: QSFP-DD is widely adopted in Ethernet-focused environments, while OSFP excels in broader applications, including InfiniBand and HPC.

Availability

Companies like Fibrecross, FS.com, and Cisco offer a range of 800G OSFP and QSFP-DD modules, supporting various transmission distances (e.g., 100m for SR8, 2km for FR4, 10km for LR4) over multimode or single-mode fiber. These modules are hot-swappable, high-performance, and often come with features like low latency and high bandwidth density.

For specific needs, such as short-range (SR) or long-range (LR) transmission, choosing between OSFP and QSFP-DD depends on your infrastructure, power requirements, and future scalability plans.

Text

Features of Cloud Hosting Server

Cloud hosting is a form of web hosting that uses resources from more than one server to offer scalable and dependable hosting solutions. My research suggests that Bsoftindia Technologies is one of the better options. They have provided digital and IT services since 2008 and offer them at a reasonable fee. Bsoftindia is a leading provider of cloud hosting solutions for businesses of all sizes. Their cloud hosting services are designed to offer scalability, flexibility, and safety, empowering businesses to perform efficiently in the digital world.

FEATURES OF CLOUD SERVERS

Intel Xeon 6226R Gold Processor: Fastest & Latest Processor: 2.9 GHz, Turbo Boost: 3.9 GHz

NVMe Storage: Micron 9300, 2000 MB/s Read/Write vs 600 MB/s in SSD

1 Gbps Bandwidth: Enjoy Unlimited, BGPed, and DDOS Protected bandwidth

Snapshot Backup: #1 Veeam Backup & Replication Setup with guaranteed restoration

Dedicated Account Manager: Dedicated AM & TAM for training, instant support, and seamless experience

3 Data Center Locations: Choose the nearest location for the least latency

99.95% Uptime: A commitment to provide 99.95% Network Uptime

Tier 3 Datacenter
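To see what a 99.95% uptime commitment allows in practice, the percentage can be turned into a downtime budget. This is a generic calculation, not the provider's SLA terms; the function name and the 30-day and 365-day periods are assumptions for illustration.

```python
def allowed_downtime_minutes(uptime_percent, days=30):
    """Minutes of downtime permitted over `days` at the given uptime percentage."""
    total_minutes = days * 24 * 60
    return total_minutes * (100 - uptime_percent) / 100


if __name__ == "__main__":
    print(f"per 30 days:  {allowed_downtime_minutes(99.95):.1f} minutes")
    print(f"per 365 days: {allowed_downtime_minutes(99.95, days=365) / 60:.2f} hours")
```

At 99.95%, that works out to about 21.6 minutes in a 30-day month, or about 4.4 hours over a year.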

Get a free demo and learn more about our cloud hosting solutions. https://bsoft.co.in/cloud-demo/

#cloud services#cloud hosting#clouds#service#technology#marketing#cloudserver#digital marketing#delhi#bestcloudhosting#bestcloudhostingsolution

Text

Trends in ICT

Here are some of the major trends in Information and Communications Technology (ICT) in 2023 and beyond:

1. Cloud computing: With more and more companies moving their IT infrastructure to the cloud, the demand for cloud services is expected to increase. Cloud storage, cloud computing, and cloud networks are some of the key areas of cloud computing.

2. Big data: Big data refers to the collection, storage, and analysis of large amounts of data. With the increasing amount of data generated by devices and sensors, big data is becoming more important.

3. Artificial intelligence (AI) and automation: AI and automation technologies such as machine learning, deep learning, and natural language processing are revolutionizing various industries.

4. Internet of Things (IoT): IoT refers to the network of physical devices, vehicles, home appliances, and other objects that are embedded with sensors, software, and other technologies for the purpose of connecting and exchanging data.

5. Cybersecurity: With the increasing reliance on technology, cybersecurity is becoming more and more important. Organizations and governments are investing heavily in cybersecurity to protect their digital infrastructure and data.

6. 5G technology: 5G is the fifth generation of wireless networks, which promises faster data transfer, higher bandwidth, and lower latency. This will enable new applications and technologies such as the Internet of Things (IoT), autonomous vehicles, and augmented reality.

7. Mixed reality: Mixed reality combines the physical and digital worlds by overlaying virtual information on the real world. This is enabled by technologies such as augmented reality and virtual reality.

8. Blockchain: Blockchain technology is a decentralized, digital ledger that maintains a secure record of transactions. This has wide-ranging implications for e-commerce, supply chain management, and finance.

9. Quantum computing: Quantum computing is a new type of computing that utilizes the principles of quantum mechanics to perform calculations. This has the potential to solve problems that are currently intractable for classical computers.

10. Smart cities: Smart cities use technology to make urban areas more sustainable, efficient, and inclusive. This includes technologies such as Internet of Things (IoT), connected transportation systems, and intelligent buildings.
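The "secure record of transactions" in the blockchain item above comes largely from chaining hashes: each block stores the hash of the one before it, so changing an old entry breaks every later link. The sketch below shows only that one idea on a single machine; it has no network, consensus, or signatures, and the function names and block layout are invented for illustration.

```python
import hashlib
import json

GENESIS_PREV_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, transactions, prev_hash):
    """SHA-256 over a canonical JSON encoding of the block's contents."""
    payload = json.dumps(
        {"index": index, "transactions": transactions, "prev_hash": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain, transactions):
    """Add a block that commits to the hash of the current last block."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_PREV_HASH
    index = len(chain)
    chain.append({
        "index": index,
        "transactions": transactions,
        "prev_hash": prev_hash,
        "hash": block_hash(index, transactions, prev_hash),
    })


def is_valid(chain):
    """Recompute every hash and check each block points at its predecessor."""
    prev_hash = GENESIS_PREV_HASH
    for block in chain:
        if block["prev_hash"] != prev_hash:
            return False
        expected = block_hash(block["index"], block["transactions"], block["prev_hash"])
        if block["hash"] != expected:
            return False
        prev_hash = block["hash"]
    return True


if __name__ == "__main__":
    ledger = []
    append_block(ledger, ["alice pays bob 5"])
    append_block(ledger, ["bob pays carol 2"])
    print(is_valid(ledger))
    ledger[0]["transactions"] = ["alice pays bob 500"]  # tamper with history
    print(is_valid(ledger))
```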

Text

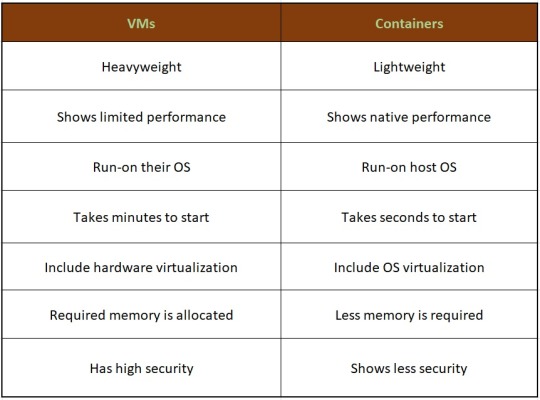

WILL CONTAINERS REPLACE HYPERVISORS?

As technology has advanced, virtualization has changed the way data centers operate. Over the years, software has been released that makes it easier for companies to manage their data centers. It allows companies to run their workloads, such as open-source object storage, across different operating systems on the same hardware, maximizing their resources and making data management easier and more useful for their business.

Choosing between the different technology models requires a proper understanding of each. The same holds for containers and hypervisors, both of which have been on the market for some time and offer companies different ways to run their workloads.

Let’s understand how they work

Virtual machines: they run on a hypervisor, which abstracts away the hardware and lets multiple operating systems run on it.

Containers: they abstract the operating system so that applications run in isolation on a shared OS, and they have become more popular recently.

Although container technology predates it, containers became far more popular after the introduction of Docker in 2013. Docker is an open-source platform used for building, deploying and managing containerized applications.

Containers always run on top of the underlying host operating system, which provides basic services, such as virtual memory support, to all the applications. Virtual machines under a hypervisor, by contrast, each require their own operating system and rely on hardware support to work properly.

Although containers and hypervisors work differently and have distinct features, the two technologies share some similarities, such as improving the efficiency of IT managed services, the profitability of the applications used, and the software development lifecycle.

Whether containers will take over and replace hypervisors has become a hot topic. Opinion is divided: some people favor containers and some favor hypervisors, as each technology has particular properties that help solve different problems.

Let’s discuss them in detail and understand how they function, how they differ, and which one is the better technology.

What are virtual machines?

Virtual machines are software-defined computers that run with the help of cloud hosting software, allowing multiple applications to run in isolation on the same hardware. They are best suited when one needs to operate different applications without letting them interfere with each other.

Because applications run separately on VMs, each application sees its own set of virtual hardware, which helps companies reduce the money spent on hardware management.

Virtual machines run on physical computers through a lightweight software layer called a hypervisor.

The hypervisor separates VMs from one another and allocates processors, memory and storage among them. Cloud hosting service providers can use this to automatically get more out of their expensive nodes.

Hypervisors allow a host machine to run different operating systems and therefore many virtual machines, which leads to maximum use of resources such as bandwidth and memory.

What is a container?

Containers are also software-defined environments, but they operate through a single host operating system. This means all applications share one operating system, and a container can be run anywhere, for example on a laptop or in the cloud.

Containers use operating system (OS) level virtualization; that is, they rely on the host operating system to perform their function. A container packages the application code, its dependencies and the operating system components it needs, allowing it to run anywhere with the help of cloud hosting technology.

They promise a streamlined way of implementing infrastructure requirements and can be used as an alternative to virtual machines.

Even though containers are known to improve how cloud platforms are developed and deployed, they are still not as secure as VMs.

A single operating system can run many containers that share its resources, which further streamlines the infrastructure the system requires.

Now that we have understood how VMs and containers work, let’s see the benefits of both technologies.

Benefits of virtual machines

They allow different operating systems to run on one hardware system, which saves on energy, cooling and rack space and so makes the cloud more economical.

This technology, provided by cloud managed services, is easy to spin up and down, and it is much easier to create backups with it.

Because backups and image restores are easy, recovering from a disaster is simple.

Each VM has an isolated operating system, so testing applications is relatively easy, free and simple.

Benefits of containers:

They are lightweight and hence boot significantly faster than VMs, within a few seconds, and they require less hardware and fewer operating systems.

They are portable and can run anywhere, which reduces problems caused by differences between environments.

They enable microservices, which make applications easier to test, reduce single points of failure and increase development velocity.

Let’s see the difference between containers and VMs

Looking at all these differences, one can see that containers have advantages over the older virtualization technology: they are faster, more lightweight and easier to manage than VMs, and they go beyond the previous technology in many ways.

With a hypervisor, virtualization happens at the hardware level: several virtual machines, each with a separate operating system, run on the same physical host. Hence each virtual machine requires its own operating system to run an application and its associated libraries.

Containers, by contrast, virtualize the operating system instead of the hardware, so each container contains only the application, its libraries and its dependencies.
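The difference in what each instance carries can be made concrete with a toy footprint model: every VM brings its own guest operating system, while containers share one host operating system. The sizes below are hypothetical placeholders chosen only to show the shape of the calculation, and the model ignores hypervisor overhead and shared image layers.

```python
def vm_footprint_gb(instances, guest_os_gb, app_gb):
    """Each VM carries a full guest OS plus the application and its libraries."""
    return instances * (guest_os_gb + app_gb)


def container_footprint_gb(instances, host_os_gb, app_gb):
    """Containers share one host OS; each adds only the app and its dependencies."""
    return host_os_gb + instances * app_gb


if __name__ == "__main__":
    # Hypothetical sizes, not measurements: 2 GB per OS, 0.5 GB per application.
    n, os_gb, app_gb = 10, 2, 0.5
    print("VMs:       ", vm_footprint_gb(n, os_gb, app_gb), "GB")
    print("Containers:", container_footprint_gb(n, os_gb, app_gb), "GB")
```

The gap grows with the number of instances, because the operating system cost is paid once for containers and once per instance for VMs.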

Like virtual machines, containers allow developers to make better use of a physical machine's CPU and memory. Containers also support a microservice architecture, allowing application components to be deployed and scaled more granularly.

Now that we have seen the benefits of and differences between the two technologies, one must know when to use containers and when to use virtual machines, as many people want to use both and some want to use only one of them.

Hypervisors are the better choice in cases such as:

Many people want to continue with virtual machines because VMs are compatible and consistent with their existing setup, and shifting to containers is not worthwhile for them.

VMs allow a single computer or cloud hosting server to run multiple applications together, which is all that most people require.

Containers share the host operating system, which is not the case with VMs. Hence containers are less safe from a security standpoint, as a single compromise can bring down all the applications together. Virtual machines, by contrast, each have their own virtual hardware and operating system, so only one application is damaged.

Containers turn out to be useful in cases such as:

Containers enable DevOps and microservices because they are portable and fast, typically starting within seconds.

Nowadays, many web applications are moving towards a microservices architecture, in which an application is built from small services. Containers support this by making it easy to update and redeploy only the part of the application that needs it.

Containers scale well: container instances can be replicated from images automatically and spun down when they are not needed.

As technology advances, people want to move to whatever is fastest, and containers are much faster here than VMs running on a hypervisor. That also enables fast testing and quick recovery of images when a reboot is performed.
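Automatic scaling of containers is usually driven by a simple proportional rule. The sketch below follows the formula documented for the Kubernetes Horizontal Pod Autoscaler; Kubernetes is not mentioned in the article and is brought in here only as a familiar example. The function name and the minimum and maximum bounds are illustrative, and the autoscaler's tolerance band and stabilization windows are left out.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Scale the replica count in proportion to how far the metric is from target.

    Implements desired = ceil(current_replicas * current_metric / target_metric),
    clamped to [min_replicas, max_replicas].
    """
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 replicas averaging 90% CPU against a 50% target: scale up.
    print(desired_replicas(4, 90, 50))
    # 4 replicas averaging 10% CPU against a 50% target: scale down.
    print(desired_replicas(4, 10, 50))
```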

Hence, will containers replace hypervisor?

Although both the cloud hosting technologies share some similarities, both are different from each other in one or the other aspect. Hence, it is not easy to conclude. Before making any final thoughts about it, let's see a few points about each.

Still, a question may come to mind: why containers?

Although, as stated above, there are many reasons to keep using virtual machines, containers provide flexibility and portability. Together with the way they allocate resources, this is increasing demand for them in the multi-cloud world.

Even today, many companies do not know how to deploy their new applications once they are built. Containerized applications are flexible, which makes them easy to run across the many cloud hosting and data center software environments of modern IT.

Containers are also useful for automation and DevOps pipelines, including continuous integration and continuous delivery (CI/CD). Because containers are small and modular, an application can be built in small parts and assembled by stacking those parts together.

They not only increase system efficiency and make better use of resources but also save money by running multiple processes on the same host.

They are quicker to boot than virtual machines, which can take minutes to boot and to recover.

Another important point is that they have a minimal structure: they do not need a full guest operating system or virtualized hardware to function, and they can be installed and removed without disturbing the whole system.

Containers replace the traditional patching process: instead of patching a running system in place, a team rebuilds the image and redeploys it. This lets organizations respond to issues faster and makes applications easier to manage.

Because containers abstract the operating system rather than the hardware, they avoid the virtualization overhead that virtual machines face: each container gets its own isolated environment without needing a separate guest operating system.

Still, virtual machines are useful to many

Although containers have more advantages than virtual machines, they still have a few disadvantages, such as security issues, since they are part of distributed cloud software.

Hacking a container environment is easier because a single operating system runs multiple applications, so a breach can give an attacker access to the whole cloud hosting system. This is not the case with virtual machines, which have an additional barrier between each VM, the host server and the other virtual machines.

If the host software gets infected by malware, the infection can spread to all the applications because they use a single operating system, which is not the case with virtual machines.

People feel more familiar with virtual machines because they have long been well established in most organizations, and businesses already have teams and procedures that manage VMs, including their deployment, backups and monitoring.

Many times, companies prefer to work with a complete operating system managed as one machine, especially for applications that are complex to understand.

Conclusion

To conclude, as we have seen, containers and virtual machines are cloud hosting technologies that solve different problems. Containers let teams focus on building code, creating better software and making applications run faster. Virtual machines are slower, heavier and less portable, yet people still prefer them for provisioning enterprise infrastructure and for running legacy or monolithic applications.

In short, if you want to run a full operating system, go for a hypervisor; if you want a lightweight, portable service from a cloud managed service provider, go for containers.

Hence, it will take time for containers to replace virtual machines, which many still need to run older applications and to host multiple operating systems in parallel, even though VMs are less cloud-native. Containers are therefore unlikely to replace virtual machines: the two technologies complement each other, and both have a place in the modern data center.

For more insights do visit our website

#container #hypervisor #docker #technology #zybisys #godaddy


Text

Video Surveillance Hardware System Market: Strategic Developments and Forecast 2025–2032

MARKET INSIGHTS

The global Video Surveillance Hardware System Market size was valued at USD 23.8 billion in 2024 and is projected to reach USD 45.6 billion by 2032, at a CAGR of 8.5% during the forecast period 2025-2032. The U.S. market was estimated at USD 14.7 billion in 2024, while China is expected to grow to USD 22.1 billion by 2032.
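
As a quick sanity check on the headline figures, the compound annual growth rate can be recomputed from the start and end values. The sketch below assumes eight compounding years between the 2024 base value and the 2032 projection; the function names are illustrative only.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values, as a fraction."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding start_value at the given rate for some years."""
    return start_value * (1 + rate) ** years


if __name__ == "__main__":
    # USD 23.8 billion (2024) growing to USD 45.6 billion (2032).
    implied = cagr(23.8, 45.6, years=8)
    print(f"Implied CAGR: {implied:.2%}")
    # Compounding the base value at the stated 8.5% for eight years.
    print(f"Projected 2032 value: USD {project(23.8, 0.085, 8):.1f} billion")
```

Under that assumption, the implied rate rounds to the stated 8.5%, so the two global figures and the growth rate are consistent with one another.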

Video surveillance hardware systems comprise essential components like cameras, storage devices, and monitors that work together to capture, store, and display security footage. These systems have evolved significantly from analog CCTV to advanced IP-based solutions featuring high-definition imaging, AI-powered analytics, and cloud connectivity. The camera segment alone is projected to reach USD 52.8 billion by 2032, growing at 9.1% CAGR.

Market growth is driven by rising security concerns across commercial and residential sectors, government mandates for public safety infrastructure, and technological advancements in AI-based surveillance. Recent developments include Axis Communications’ 2024 launch of thermal cameras with onboard analytics and Hikvision’s partnership with Microsoft to integrate Azure AI into their surveillance ecosystem. Leading players like Bosch Security Systems, Hanwha Techwin, and Avigilon continue to dominate the competitive landscape through innovation in edge computing and 5G-enabled devices.

MARKET DYNAMICS

MARKET DRIVERS

Rising Security Concerns and Crime Rates to Accelerate Video Surveillance Adoption

Global security threats and increasing crime rates are driving significant investments in video surveillance infrastructure. The global security equipment market continues to expand as organizations prioritize asset protection and public safety. Video surveillance systems offer proactive monitoring capabilities that deter criminal activities while providing crucial forensic evidence. Industrial facilities, transportation hubs, and government institutions are particularly investing in advanced surveillance to mitigate risks. This trend is further intensified by geopolitical tensions and the growing need for border security worldwide.

Technological Advancements in AI-Powered Video Analytics to Fuel Market Growth

The integration of artificial intelligence with surveillance hardware is transforming traditional monitoring systems into intelligent security solutions. Modern surveillance cameras now incorporate advanced features such as facial recognition, license plate detection, and behavioral analysis through machine learning algorithms. Edge computing capabilities enable real-time processing directly on cameras, reducing bandwidth requirements while improving response times. These innovations significantly enhance threat detection accuracy and operational efficiency across various sectors.

Moreover, the emergence of 5G networks facilitates high-speed data transmission, enabling more sophisticated remote monitoring applications. Cloud-based video surveillance solutions offer scalable storage and analytics, further driving adoption among SMEs and large enterprises alike.

Government Regulations and Smart City Initiatives to Drive Market Expansion

Governments worldwide are implementing stringent security regulations and investing heavily in smart city projects, creating substantial demand for surveillance hardware. Many countries now mandate video surveillance in public spaces, commercial buildings, and transportation systems. The allocation of substantial budgets for urban security infrastructure demonstrates the strategic importance of surveillance technology in modern governance and public safety management.

➤ For instance, several metropolitan cities have deployed thousands of surveillance cameras as part of comprehensive safe city programs, often integrating them with centralized command centers.

MARKET RESTRAINTS

High Installation and Maintenance Costs to Limit Market Penetration

While surveillance technology offers significant benefits, the substantial capital expenditure required for system deployment poses a major barrier, particularly for small businesses and developing regions. High-quality surveillance hardware demands significant upfront investment, with additional costs for installation, integration, and ongoing maintenance. The total cost of ownership extends beyond equipment to include network infrastructure, storage solutions, and software licensing fees.

Other Restraints

Data Privacy Regulations: Stringent data protection laws in various regions create compliance challenges for surveillance system operators. Privacy concerns have led to restrictions on video recording in certain areas, requiring businesses to navigate complex legal frameworks when deploying surveillance solutions.

Cybersecurity Vulnerabilities: The increasing connectivity of surveillance equipment exposes systems to potential cyber threats, deterring some organizations from adoption. Networked cameras and connected devices can become entry points for security breaches if not properly secured.

MARKET CHALLENGES

Integration Complexities with Legacy Systems to Pose Implementation Challenges

Many organizations face technical difficulties when upgrading or expanding existing surveillance infrastructure. Compatibility issues between new hardware and older systems often require additional investments in interfaces or complete system replacements. The migration to IP-based solutions from analog systems presents particular challenges in terms of network readiness and staff training.

Other Challenges

Storage Management: The exponential growth in video data volume creates storage capacity and management challenges, requiring innovative compression technologies and efficient data retention policies.

False Alarm Rates: Advanced analytics systems sometimes generate false alerts due to environmental factors or algorithm limitations, potentially reducing operational efficiency and user confidence.
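
To make the storage management challenge above concrete, here is a rough sizing sketch. It assumes every camera records continuously at a constant bitrate. The camera count, bitrate and retention period are hypothetical inputs, not figures from the report.

```python
SECONDS_PER_DAY = 86_400


def storage_tb(cameras: int, bitrate_mbps: float, retention_days: int) -> float:
    """Approximate storage in decimal terabytes for continuous recording.

    bitrate_mbps is the average per-camera stream bitrate in megabits per
    second. All parameters are illustrative assumptions.
    """
    bytes_per_second = cameras * bitrate_mbps * 1_000_000 / 8
    total_bytes = bytes_per_second * SECONDS_PER_DAY * retention_days
    return total_bytes / 1e12


if __name__ == "__main__":
    # Hypothetical site: 100 cameras at 4 Mbps, footage kept for 30 days.
    print(f"{storage_tb(100, 4.0, 30):.1f} TB")
    # Halving the bitrate (better compression) halves the requirement.
    print(f"{storage_tb(100, 2.0, 30):.1f} TB")
```

Because the result is linear in every input, better compression and shorter retention each reduce the storage requirement proportionally.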

MARKET OPPORTUNITIES

Expansion of IoT and Edge Computing to Create New Growth Avenues

The convergence of surveillance technology with IoT ecosystems presents significant opportunities for market players. Smart sensors and edge devices enable more distributed and intelligent security architectures. The ability to process video data locally reduces bandwidth requirements while enabling faster response times—particularly valuable for time-sensitive applications.
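
The bandwidth saving from local processing can be estimated with simple arithmetic. The sketch below compares streaming every camera continuously to a central site with uploading footage only while an on-camera analytic flags an event. The function name, camera count, bitrate and event fraction are all assumptions chosen for illustration.

```python
def uplink_mbps(cameras: int, bitrate_mbps: float,
                upload_fraction: float = 1.0) -> float:
    """Average uplink bandwidth in Mbps for a group of cameras.

    upload_fraction is the share of time each camera actually sends video:
    1.0 models continuous streaming, a small value models edge analytics
    that upload only flagged events. All inputs are hypothetical.
    """
    if not 0.0 <= upload_fraction <= 1.0:
        raise ValueError("upload_fraction must be between 0 and 1")
    return cameras * bitrate_mbps * upload_fraction


if __name__ == "__main__":
    centralized = uplink_mbps(100, 4.0)                    # stream everything
    edge = uplink_mbps(100, 4.0, upload_fraction=0.05)     # events only
    print(f"Centralized: {centralized:.0f} Mbps, edge: {edge:.0f} Mbps")
    print(f"Reduction: {1 - edge / centralized:.0%}")
```

This ignores metadata traffic and peak bursts when many cameras trigger at once, so it is a rough average, not a sizing guide for a real uplink.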

Emerging Applications in Retail Analytics and Business Intelligence

Beyond security, video surveillance hardware is finding new applications in customer behavior analysis and operational optimization. Retailers leverage advanced camera systems to track foot traffic, analyze shopping patterns, and measure promotional effectiveness. These commercial applications represent a growing revenue stream for surveillance solution providers.

The development of specialized surveillance solutions for vertical markets such as healthcare, education, and manufacturing continues to expand the addressable market for hardware vendors. Customized systems designed for specific industry requirements demonstrate strong growth potential.

VIDEO SURVEILLANCE HARDWARE SYSTEM MARKET TRENDS

AI-Powered Video Analytics Driving Smart Surveillance Adoption

The integration of artificial intelligence (AI) and machine learning (ML) into video surveillance hardware represents one of the most transformative trends in the security industry. Advanced analytics capabilities now enable real-time object detection, facial recognition, and behavioral pattern analysis, significantly enhancing threat detection accuracy. The global market for AI-based surveillance cameras is projected to grow at a CAGR of approximately 22% from 2024 to 2032 as enterprises and governments increasingly adopt these solutions. Edge computing has further accelerated this trend by allowing cameras to process data locally, reducing bandwidth requirements while improving response times for critical security events.

Other Trends

Shift Toward IP-Based Network Cameras